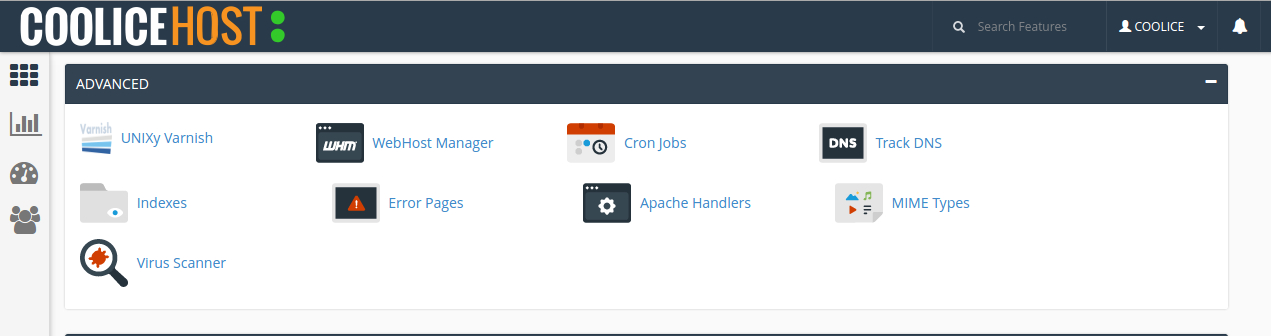

Varnish Cache is designed to be one of the best web application accelerators, but it is still easy to make a mistake or two when using it. Here are the 10 most common mistakes and how you can avoid them.

1. Not Monitoring the “Nuked Counter”

When its storage is full, Varnish Cache forcefully evicts (“nukes”) the least recently used objects to make room for new ones. You can monitor this with varnishstat’s n_lru_nuked counter. Keeping an eye on this counter tells you whether there is enough storage space for future objects: if it keeps climbing, your cache is too small and you need to add more storage.
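One way to watch the counter is to sample it twice and look at the eviction rate. The Python sketch below assumes the plain-text output of varnishstat -1, with the counter name in the first column and its value in the second; newer Varnish versions print the counter as MAIN.n_lru_nuked, older ones as plain n_lru_nuked. The function names are hypothetical.

```python
"""Sample n_lru_nuked from `varnishstat -1` output and compute an eviction rate."""
import subprocess


def read_counter(varnishstat_output: str, name: str) -> int:
    """Return the value of counter `name`, with or without a section prefix such as MAIN."""
    for line in varnishstat_output.splitlines():
        fields = line.split()
        if len(fields) < 2:
            continue
        if fields[0] == name or fields[0].endswith("." + name):
            return int(fields[1])
    raise KeyError(f"counter {name!r} not found")


def snapshot() -> str:
    """Run `varnishstat -1` once (needs varnishstat on PATH and a running varnishd)."""
    result = subprocess.run(["varnishstat", "-1"], capture_output=True, text=True, check=True)
    return result.stdout


def nuked_per_second(before: str, after: str, interval_seconds: float) -> float:
    """LRU evictions per second between two snapshots taken interval_seconds apart."""
    delta = read_counter(after, "n_lru_nuked") - read_counter(before, "n_lru_nuked")
    return delta / interval_seconds
```

For example, call snapshot(), wait 60 seconds, call it again, and pass both results to nuked_per_second(). A rate that stays above zero under normal traffic means objects are being evicted before they expire.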

2. Relying on Online Sources for Anything Related to Varnish Cache

While it’s good that the Internet keeps growing into the world’s largest library, not all of the information on it is reliable. For instance, many blog posts contain instructions for people who want to test various settings with Varnish Cache. However, a setting that works well on a local area network may cause the system to malfunction once it is exposed to the Internet.

If you do follow such instructions, make sure you read and understand them carefully. Otherwise, you are better off downloading the Varnish Book.

3. Not Having a Suitable Plan When Setting High TTLs

Higher TTLs make your website faster and therefore give users a better experience. They also reduce the load on the backend. If you plan to use high TTLs, make sure you have a way to invalidate cached content when it changes (e.g. Varnish Enhanced Cache Invalidation).
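A simpler, widely used alternative to a dedicated invalidation product is to send an HTTP PURGE request for a URL whenever its content changes. The Python sketch below assumes your VCL has been written to accept PURGE requests (the default configuration does not treat PURGE as a purge), and the host, port, and path you pass in are placeholders for your own setup.

```python
"""Invalidate one cached URL by sending an HTTP PURGE request to Varnish Cache.

Assumption: your VCL explicitly handles the PURGE method (usually restricted by an ACL).
"""
import http.client


def purge(host: str, port: int, path: str, timeout: float = 5.0) -> int:
    """Send PURGE for `path` and return the HTTP status code of the reply."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("PURGE", path)
        response = conn.getresponse()
        response.read()  # drain the body before closing the connection
        return response.status
    finally:
        conn.close()
```

A content management system could call purge() from its "page saved" hook, so that long TTLs never leave stale pages in the cache.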

4. Varying on User-Agent Without Normalizing It

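If your content management system sends “Vary: User-Agent”, Varnish Cache stores a separate copy of each object for every distinct User-Agent string. Since very few users have identical User-Agent strings, almost every request becomes a cache miss and your cache is effectively rendered useless. To prevent this, normalize the User-Agent header (for example, into a handful of device classes) before Varnish Cache varies on it.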
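To illustrate what normalization means, the Python sketch below collapses raw User-Agent strings into three device classes. In production this logic would live in VCL (typically in vcl_recv); the class names and substring rules here are illustrative assumptions, not a complete device-detection scheme.

```python
"""Collapse raw User-Agent strings into a few device classes before varying on them."""
from typing import Optional


def normalize_user_agent(user_agent: Optional[str]) -> str:
    """Map a User-Agent string to 'tablet', 'mobile' or 'desktop' using crude substring rules."""
    ua = (user_agent or "").lower()
    # Check tablets first: iPad User-Agent strings also contain "Mobile".
    if "ipad" in ua or "tablet" in ua:
        return "tablet"
    if "iphone" in ua or "android" in ua or "mobile" in ua:
        return "mobile"
    return "desktop"
```

After this mapping, the cache holds at most three variants of each object instead of one per distinct browser string.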

5. Caching Objects That Carry Set-Cookie Headers

Caching an object together with its Set-Cookie header means the same cookie is sent to every client that receives the cached copy. If that cookie holds a session ID, one user’s session is effectively handed to other users, which can have devastating effects. Avoid using return (deliver) in vcl_fetch, because it skips the built-in check that refuses to cache responses carrying Set-Cookie. If you do need to use deliver, check for a Set-Cookie header first.
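In Varnish 3, the built-in vcl_fetch turns a response into a hit-for-pass object instead of caching it when its TTL is zero or less, when it carries a Set-Cookie header, or when it has Vary: *. The Python function below only models that decision, to show what your own vcl_fetch should check before returning deliver; it is not Varnish code, and the class and function names are hypothetical.

```python
"""Python model of the cacheability check in Varnish 3's built-in vcl_fetch (not Varnish code)."""
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class BackendResponse:
    ttl: float  # seconds, as Varnish would derive it from Cache-Control/Expires
    headers: Dict[str, str] = field(default_factory=dict)


def fetch_decision(resp: BackendResponse) -> str:
    """Return 'hit_for_pass' for uncacheable responses, 'deliver' otherwise."""
    headers = {name.lower(): value for name, value in resp.headers.items()}
    if resp.ttl <= 0 or "set-cookie" in headers or headers.get("vary") == "*":
        # The built-in VCL also sets the hit-for-pass TTL to 120 s at this point.
        return "hit_for_pass"
    return "deliver"
```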

6. Tinkering with Accept-Encoding

Varnish 3.0 and later supports gzip natively: it normalizes the Accept-Encoding request header itself, and it can store a compressed object and decompress it on the fly for clients that do not accept gzip. There is therefore no need to manipulate the Accept-Encoding header manually.

7. Failure to Use Custom Error Messages

One of the most dreaded error responses in Varnish Cache is the “Guru Meditation” error, which appears when the origin servers fail and Varnish Cache cannot find a suitable object to serve to the client. You can easily remedy this by customizing the error page in the VCL subroutine vcl_error (vcl_backend_error and vcl_synth in Varnish 4.0 and later). This way, your error page can match the look and feel of the rest of your website.

For more ideas, you can take a look at www.varnish-software.com.

8. Misconfiguring Memory

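If you give Varnish Cache more memory than the machine can spare, the system risks running out of memory. This can be devastating, especially on Linux, where the kernel’s out-of-memory killer may terminate processes, including Varnish Cache itself. Many users also fail to realize that Varnish Cache has a per-object memory overhead on top of the configured storage size, so it uses more memory than the storage size alone suggests.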

On the other hand, giving Varnish Cache too little memory results in a very low cache hit rate, which translates directly into a worse experience for users. Getting this balance right matters, because you do not want to drive customers away from your website due to lack of memory.
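A back-of-the-envelope calculation helps find that balance. The Python sketch below assumes a fixed overhead per object kept outside the storage area; the Varnish documentation mentions roughly 1 KB per object, but treat that figure as an assumption to verify for your version. The function names are hypothetical.

```python
"""Back-of-the-envelope memory sizing for Varnish Cache (all figures are assumptions)."""

# Assumed per-object overhead outside the storage area (roughly 1 KB per the Varnish docs).
OVERHEAD_PER_OBJECT = 1024


def estimated_memory_bytes(n_objects: int, avg_object_bytes: int,
                           overhead_per_object: int = OVERHEAD_PER_OBJECT) -> int:
    """Storage plus per-object overhead needed to hold n_objects average-sized objects."""
    return n_objects * (avg_object_bytes + overhead_per_object)


def objects_that_fit(available_bytes: int, avg_object_bytes: int,
                     overhead_per_object: int = OVERHEAD_PER_OBJECT) -> int:
    """How many average-sized objects fit into available_bytes, counting the overhead."""
    return available_bytes // (avg_object_bytes + overhead_per_object)
```

For one million 10 KB objects, estimated_memory_bytes(1_000_000, 10_240) gives 11,264,000,000 bytes (about 10.5 GiB), 10% more than the objects alone. With many small objects the overhead share grows: for 1 KB objects it doubles the memory needed.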

9. Misunderstanding Hit-for-Pass

Many users do not understand how hit-for-pass works, which can lead them to misconfigure Varnish Cache.

Hit-for-pass works like this: when multiple requests for the same uncached object arrive, Varnish Cache sends only one of them to the backend and puts the rest on a waiting list until the response comes back. If that response turns out to be uncacheable, for example because it carries a Set-Cookie header, Varnish Cache creates a hit-for-pass object. This object reminds Varnish Cache that later requests for this URL should be sent straight to the backend instead of being put on the waiting list.

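By default, Varnish Cache keeps hit-for-pass objects for 120 seconds. If you set this TTL to 0, there is no hit-for-pass object to consult, so requests for that URL go on the waiting list and are sent to the backend one at a time (serialized access). This can slow your website down dramatically.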
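The cost of serialized access is easy to see with a toy model. The Python sketch below assumes that all requests arrive at once, that every backend fetch takes the same time, and that the backend can serve requests in parallel; real traffic is messier, so read the numbers as an illustration only. The function name is hypothetical.

```python
"""Toy model: how long the last of N simultaneous requests waits for an uncacheable URL."""


def last_client_wait(n_requests: int, backend_seconds: float, hit_for_pass: bool) -> float:
    """Seconds the last client waits, assuming each backend fetch takes backend_seconds."""
    if n_requests <= 0:
        return 0.0
    if hit_for_pass:
        # A hit-for-pass object sends every request straight to the backend, in parallel.
        return backend_seconds
    # No hit-for-pass object: requests queue on the waiting list and are fetched one by one.
    return n_requests * backend_seconds
```

With 10 simultaneous requests and a 0.5-second backend, the last client waits 0.5 seconds with a hit-for-pass object but 5 seconds without one.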

10. Not Monitoring Syslog

Varnish Cache runs as two separate processes.

a. Management Process

This process is responsible for various administrative tasks and for keeping the child process running.

b. Child Process

This process does most of the heavy lifting. If it crashes, the management process automatically restarts it, so the downtime is often barely noticeable from the users’ end. However, with the standard malloc and file storage backends, the restart also empties the cache.

Monitoring syslog is recommended, since Varnish Cache logs child process crashes and restarts there. Another way is to check varnishstat’s uptime counter: if it has dropped back to a value near 0, the child process has just been restarted.
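Rather than hoping to catch the uptime counter at exactly 0, you can compare two readings: if the child uptime is lower than in the previous reading, the child restarted in between. The Python sketch below assumes varnishstat -1 output in which the child uptime is MAIN.uptime (newer releases) or plain uptime (older releases such as Varnish 3; check your own output). MGT.uptime belongs to the management process and is deliberately ignored. The function names are hypothetical.

```python
"""Detect a child process restart by comparing two `varnishstat -1` snapshots."""

# Child uptime counter names; MGT.uptime (management process) is intentionally excluded.
CHILD_UPTIME_NAMES = ("MAIN.uptime", "uptime")


def child_uptime(varnishstat_output: str) -> int:
    """Return the child process uptime in seconds from one snapshot."""
    for line in varnishstat_output.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] in CHILD_UPTIME_NAMES:
            return int(fields[1])
    raise KeyError("child uptime counter not found")


def child_restarted(before: str, after: str) -> bool:
    """True if the child uptime went backwards between the two snapshots."""
    return child_uptime(after) < child_uptime(before)
```

Running this check from a cron job or monitoring agent every few minutes catches restarts that a glance at varnishstat would miss.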